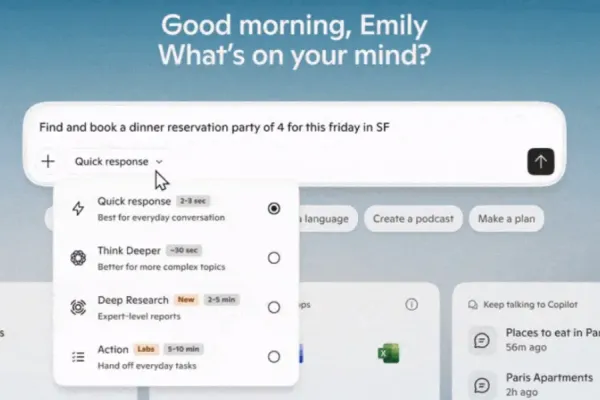

Microsoft has issued a warning about potential security risks associated with Copilot Actions, an experimental set of AI features integrated into Windows. The features are designed to assist with tasks such as file organization and meeting scheduling, acting as a digital collaborator.

Security Concerns with AI Features

The company acknowledges that Copilot Actions can introduce security risks, including data exfiltration and malicious code execution. Security experts emphasize the risks inherent in large language models (LLMs), pointing to hallucinations, in which models generate incorrect information, and prompt injection attacks, in which crafted input overrides an agent's instructions.

Microsoft specifies that Copilot Actions is experimental and switched off by default. According to the company's advisory, users should activate it only after understanding the potential risks.

Critics and Expert Insights

Critics argue that Microsoft's warning is inadequate, likening it to past warnings about Office macros that went ignored. They also note that experimental features often become defaults without adequate security measures. According to security analysts, some users might inadvertently enable risky features when notifications are unclear, raising liability concerns.

These critiques extend to similar AI implementations from other technology firms, highlighting a broader industry issue.