Microsoft has introduced agentic AI capabilities for Windows 11, noting potential security risks including hallucinations and cross-prompt injection attacks.

Security Concerns Highlighted

According to Microsoft, the new AI can hallucinate, leading to security vulnerabilities. Such flaws can result in undesired actions like data exfiltration or malware installation.

- Cross-prompt injection exposes users to hidden commands in UI elements or documents.

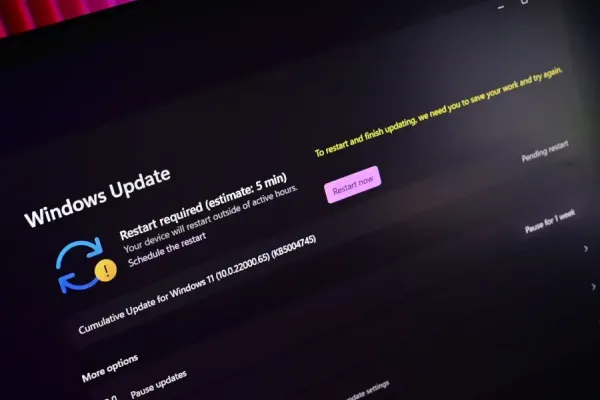

- Users are warned these agentic features are experimental and not enabled by default.

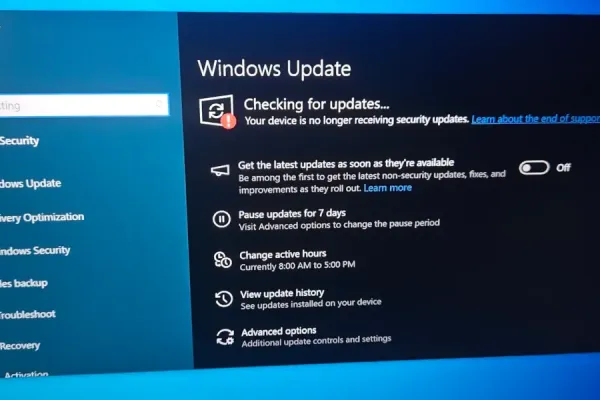

User Control and Data Privacy

The company has outlined three principles: transparency in agent actions, maintaining data security standards, and user approval for data queries.

Microsoft advises users to understand security implications before enabling the AI agent, noting these as aspirations rather than guarantees.

Market Impact and Criticism

This move reflects the growing trend of implementing AI despite known flaws, responding to competitive pressures in the tech industry.

Critics argue that transferring security risk assessment to users is complex and burdensome.